Numberly creates and hosts thousands of websites and web-facing APIs built by more than a hundred developers.

Spread across more than 4,000 GitLab projects, these interfaces and endpoints become mission-critical parts of our clients' businesses once they are in production.

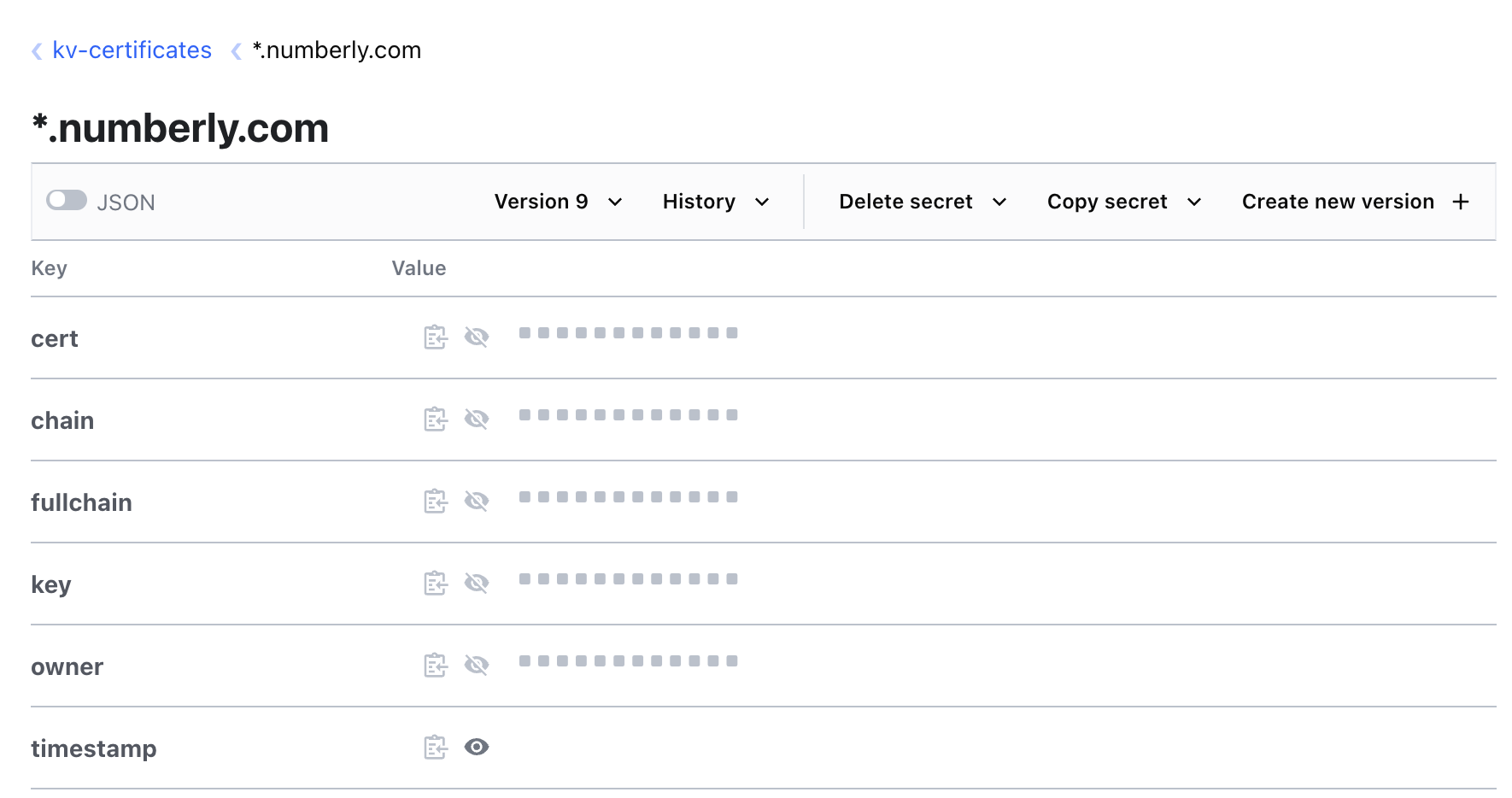

Protecting access to those resources has long been a necessity for us, starting with on-the-wire encryption and HTTPS.

Over the years, we have faced many of the scaling challenges that come with SSL certificate management: reducing the friction it adds to production delivery, and automating the certificate life cycle and its maintenance. In many ways, our challenges are similar to those that full-scale hosting providers such as OVH have been facing.

There is a big gap between managing a dozen SSL certificates and managing thousands. Optimizing the time humans spend creating, installing, monitoring, and renewing them is not enough; to scale, those operations eventually have to become transparent to your developers and your infrastructure.

We wrote this series of articles to share our 20-year journey and experience in managing secrets, from SSL certificate management at scale to generalized secret management for teams and applications.